by Lucas Nolan, Breitbart:

Recent research has uncovered a worrying issue: AI chatbots such as ChatGPT can infer sensitive personal information about individuals from casual conversations, like an evil cybernetic version of Sherlock Holmes.

Wired reports that AI chatbots have emerged as intelligent conversationalists, capable of engaging users in seemingly meaningful, humanlike interactions. Beneath the surface of casual conversation, however, lurks a concerning capability. New research led by computer scientists has revealed that chatbots built on sophisticated language models can subtly extract a wealth of personal information from users, even during the most mundane conversations. In other words, AI can determine all sorts of sensitive facts about you from simple conversations, and that information could then be used for intrusive advertising, or for even worse purposes if it falls into the wrong hands.

SOTN Editor’s Note: While the vast majority of terrorist attacks in and around the Zionist State of Israel are perpetrated by the MOSSAD-CIA-MI6 terror group, there are instances when the highly oppressed and abused Palestinians lash out in extreme desperation. However, the recent Hamas ‘surprise attacks’ were obviously carried out under the direction of the IDF and Israeli intelligence services.

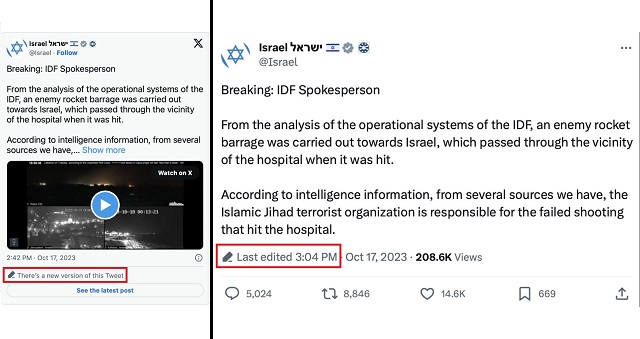

The Israeli government on Tuesday deleted a video that it falsely claimed showed that the Palestinian Islamic Jihad, not Israel, was responsible for