by Ramiro Romani, Unlimited Hangout:

The internet is about to change. In many countries, there’s currently a coordinated legislative push to effectively outlaw encryption of user-uploaded content under the guise of protecting children. This means websites or internet services (messaging apps, email, etc.) could be held criminally or civilly liable if someone used them to upload abusive material. If these bills become law, people like myself who help supply private communication services could be penalized or imprisoned simply for protecting the privacy of our users. In fact, anyone who runs a website with user-uploaded content could be punished the same way. In today’s article, I’ll show you why these bills not only fail to protect children but also put the internet as we know it in jeopardy, and why we should question the organizations behind the push.


Let’s quickly recap some of the legislation.

European Union

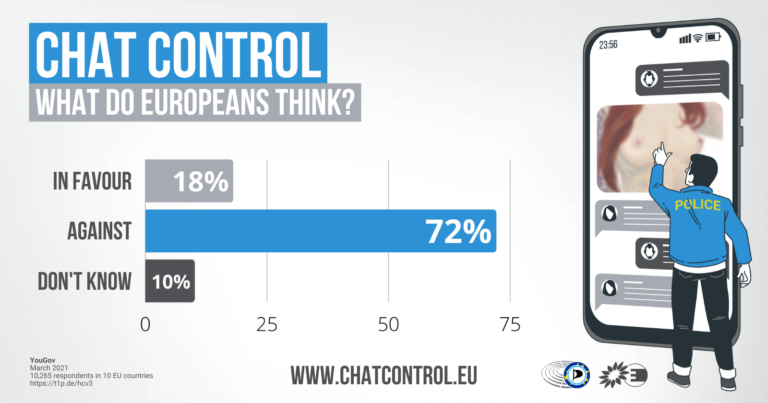

– Chat Control: would require internet services (email, chat, storage) to scan all messages and content and report flagged content to the EU. Every internet-based service would have to scan everything uploaded to it, even if it’s end-to-end encrypted. Content would be analyzed using machine learning (i.e. AI), and matches would automatically be reported to the police. The proposal is awaiting a vote from the EU LIBE committee.

United Kingdom

– The Online Safety Act 2023: would require user service providers to enforce age limits and checks, remove content that is legal but harmful to children, and scan photos for material related to child sexual abuse and exploitation, as well as terrorism. It would require providers to be able to identify these types of material in private communications and to take that content down. This means providers would need visibility into messaging, even when those messages are end-to-end encrypted. End-to-end encrypted messaging providers such as WhatsApp, Viber, Signal, and Element have indicated in an open letter that surveillance of this type simply isn’t possible without breaking end-to-end encryption entirely, and have threatened to leave the UK if the bill were passed and enforced without removing the offending Clause 122. The bill was recently passed by Parliament unchanged and will become enforceable in 2024.

United States

– The EARN IT Act 2023: would allow US states to hold websites criminally liable for not scanning user-uploaded content for CSAM (child sexual abuse material). This would effectively ban end-to-end encryption. This bill has 22 cosponsors and is awaiting an order to report to the Senate.

– The STOP CSAM Act 2023: would allow victims who suffered abuse or exploitation as children to sue any website that “recklessly” hosted pictures of the exploitation or abuse, e.g. if the website was not automatically scanning uploads. Websites are already required by law to remove CSAM if made aware of it, but this would require providers to scan all uploaded files. This bill has 4 cosponsors and is awaiting an order to report to the Senate.

– Kids Online Safety Act (KOSA): would require platforms to verify the ages of their visitors and filter content promoting self-harm, suicide, eating disorders, and sexual exploitation. This would inherently require an age verification system for all users and transparency into content algorithms, including data sharing with third parties. This bill has 47 bipartisan cosponsors and is awaiting an order to report to the Senate.

It’s important to note that the language in these bills and the definition of “service providers” extend to any website or online property that has user-uploaded content. This could be as simple as a blog that allows comments or a site that allows file uploads. It could be a message board or chatroom: literally anything on the internet that has two-way communication. Most websites are operated by everyday people, not huge tech companies. They have neither the resources nor the ability to implement scanning on their websites under threat of fine or imprisonment. They would have to either risk operating in violation of the law or shut down their websites. This means your favorite independent media site, hobbyist forum, or random message board could disappear. These bills would crumble the internet as we know it and centralize it further for the benefit of Big Tech companies, which are rapidly expanding the surveillance agenda.

We must pause and ask ourselves, is this effort to ramp up surveillance really about protecting children?

How do companies currently deal with CSAM?

In the United States, tracking CSAM is recognized as a joint effort between ESPs (Electronic Service Providers) like Google and the National Center for Missing & Exploited Children (NCMEC), a private non-profit established by Congress in 1984 and primarily funded by the United States Department of Justice. Unlimited Hangout has previously reported on the NCMEC and its ties to figures such as Hillary Clinton and intelligence-funded NGOs such as Thorn. The NCMEC also receives corporate contributions from big names such as Adobe, Disney, Google, Meta, Microsoft, Palantir, Ring Doorbell, Verizon, and Zoom.
