by Chris Morrison, Daily Sceptic:

If you cannot build a model that predicts the next draw from a lottery ball machine, you cannot build one that predicts the future of the climate, suggests former computer modeller Greg Chapman in a recent essay in Quadrant. Chapman, who holds a PhD in physics, notes that the climate system is chaotic, which means “any model will be a poor predictor of the future”. A lottery ball machine, he observes, “is a comparatively much simpler and smaller interacting system”.

Most climate models run hot, a polite term for endless failed predictions of runaway global warming. If this were a “real scientific process”, argues Chapman, the hottest two thirds of the models would be rejected by the Intergovernmental Panel on Climate Change (IPCC). If that happened, he continues, there would be outrage in the climate science community, especially from the rejected teams, “due to their subsequent loss of funding”. More importantly, he adds, “the so-called 97% consensus would instantly evaporate”. Once the hottest models were rejected, the projected temperature rise from pre-industrial times to 2100 would be 1.5°C, mostly due to natural warming. “There would be no panic, and the gravy train would end,” he says.

As COP27 enters its second week, the Roger Hallam-grade hysteria, the intelligence-insulting ‘highway to hell’ narrative, continues to be ramped up. Invariably, behind all of these claims lies a climate model or a corrupt, adjusted surface temperature database. In a recent essay, also published in Quadrant, the geologist Professor Ian Plimer describes COP27 as “the biggest public policy disaster in a lifetime”. In a blistering attack on climate extremism, he writes:

> We are reaping the rewards of 50 years of dumbing down education, politicised poor science, a green public service, tampering with the primary temperature data record and the dismissal of common sense as extreme right-wing politics. There has been a deliberate attempt to frighten poorly-educated young people about a hypothetical climate emergency by the mainstream media, uncritically acting as stenographers for green activists.

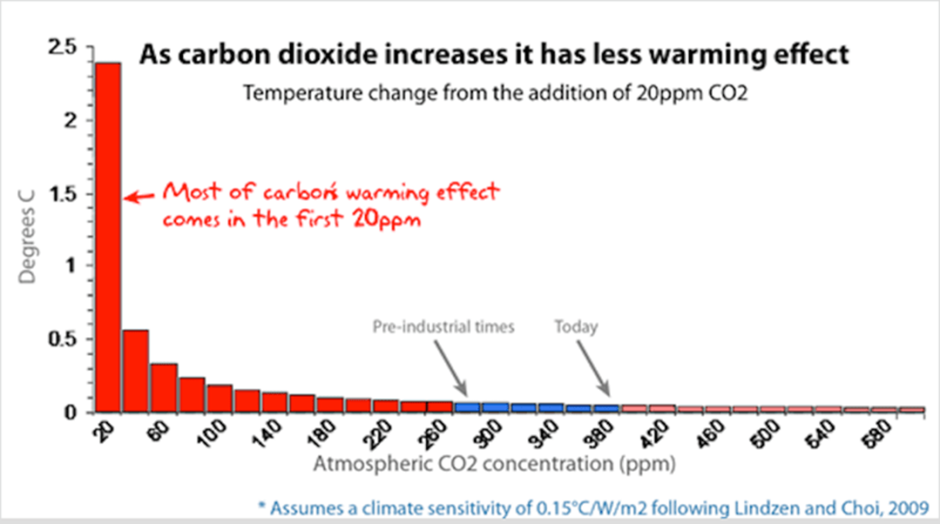

In his detailed essay, Chapman explains that all the forecasts of global warming arise from the “black box” of climate models. If the amount of warming were calculated from the “simple, well known relationship between CO2 and solar energy spectrum absorption”, a doubling of the gas in the atmosphere would produce only 0.5°C of warming. This is due to the logarithmic nature of the relationship: each additional increment of CO2 has a smaller effect than the one before.
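The logarithmic relationship can be written out as a simple worked equation. This is a sketch under stated assumptions: it ignores all feedbacks, $S$ is the warming per doubling and is set to Chapman's 0.5°C figure rather than any independently derived value, and $C_0$ is a reference concentration such as the pre-industrial level.

$$
\Delta T = S \log_2\!\left(\frac{C}{C_0}\right), \qquad S = 0.5\,^{\circ}\mathrm{C}
$$

$$
C = 2C_0 \;\Rightarrow\; \Delta T = 0.5\,^{\circ}\mathrm{C}, \qquad C = 4C_0 \;\Rightarrow\; \Delta T = 1.0\,^{\circ}\mathrm{C}
$$

Because of the logarithm, each doubling adds the same increment, so quadrupling CO2 produces twice the warming of a single doubling, not four times as much.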

This hypothesis about the ‘saturation’ of greenhouse gases is contentious, but it does offer a more credible explanation of the relationship between CO2 and temperature observed in the past. Levels of CO2 have been 10-15 times higher in some geological periods, and the Earth has not turned into a fireball.

Chapman goes into detail about how climate models work, and a full explanation is available in his essay. Put simply, the Earth is divided into a grid of cells stretching from the bottom of the ocean to the top of the atmosphere. The first problem he identifies is that the cells are large, at 100 km × 100 km. Within such a large area, component properties such as temperature, pressure, solids, liquids and vapour are assumed to be uniform, whereas there is considerable atmospheric variation over such distances. The resolution is constrained by super-computing power, but the result, says Chapman, is that an “unavoidable error” is introduced before the models even start.

Determining the component properties is the next minefield, and the lack of data for most areas of the Earth, with none at all for the oceans, “should be a major cause for concern”. Once a model is running, some of the changes between cells can be calculated according to the laws of thermodynamics and fluid mechanics, but many processes, such as the effects of clouds and aerosols, are simply assigned values. Climate modellers have been known to describe this activity as an “art”. Most of these processes are poorly understood, which introduces further error.

Another major problem arises from the non-linear and chaotic nature of the atmosphere. The model is stuffed full of assumptions and averaged guesses. Computer models in other fields typically begin from a static ‘steady state’ in preparation for start-up. However, Chapman notes: “There is never a steady state point in time for the climate, so it’s impossible to validate climate models on initialisation.” Finally, despite all the flaws, climate modellers try to ‘tune’ their results to match historical trends. Chapman gives this adjustment process short shrift: with so many uncertainties there is no unique match, and there is an “almost infinite” number of ways to match history. The uncharitable might argue that it is a waste of time, but of course suitably scary figures are in demand to push the command-and-control Net Zero agenda.
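The sensitivity of a chaotic system to its starting point can be seen in a toy system far simpler than a lottery machine. The following is a minimal sketch, not drawn from Chapman's essay: it iterates the logistic map x(n+1) = r·x(n)·(1 − x(n)) with r = 4, a textbook chaotic regime, from two starting values that differ by one part in ten billion. The function names `logistic_trajectory` and `first_divergence` are hypothetical, and this is not a climate model; it only shows how a tiny initialisation error grows until the two runs bear no resemblance to each other.

```python
from typing import List, Optional

# Toy illustration of sensitive dependence on initial conditions (chaos).
# The logistic map x_{n+1} = r * x_n * (1 - x_n) is a textbook example;
# it is NOT a climate model and is not taken from Chapman's essay.


def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 100) -> List[float]:
    """Iterate the logistic map from x0, returning steps + 1 states (x0 included)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def first_divergence(a: List[float], b: List[float], tol: float = 0.1) -> Optional[int]:
    """Return the first step at which two trajectories differ by more than tol, else None."""
    for n, (xa, xb) in enumerate(zip(a, b)):
        if abs(xa - xb) > tol:
            return n
    return None


if __name__ == "__main__":
    a = logistic_trajectory(0.2)
    # Perturb the starting value by one part in ten billion, a stand-in for
    # an unavoidable measurement or initialisation error.
    b = logistic_trajectory(0.2 + 1e-10)
    step = first_divergence(a, b)
    print(f"Runs start {abs(a[0] - b[0]):.1e} apart and differ by more than 0.1 at step {step}")
```

For r = 4 the gap between the runs roughly doubles at each step on average, so a perturbation of 1e-10 typically becomes macroscopic after a few dozen iterations; the exact step printed depends on floating-point details.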

It is for these reasons that the authors of the World Climate Declaration, which states that there is no climate emergency, said climate models “have many shortcomings and are not remotely plausible as global policy tools”. As Chapman explains, models use super-computing power to amplify the interrelationships between unmeasurable forces, boosting small incremental CO2 heating. The model forecasts are then presented as ‘primary evidence’ of a climate crisis.